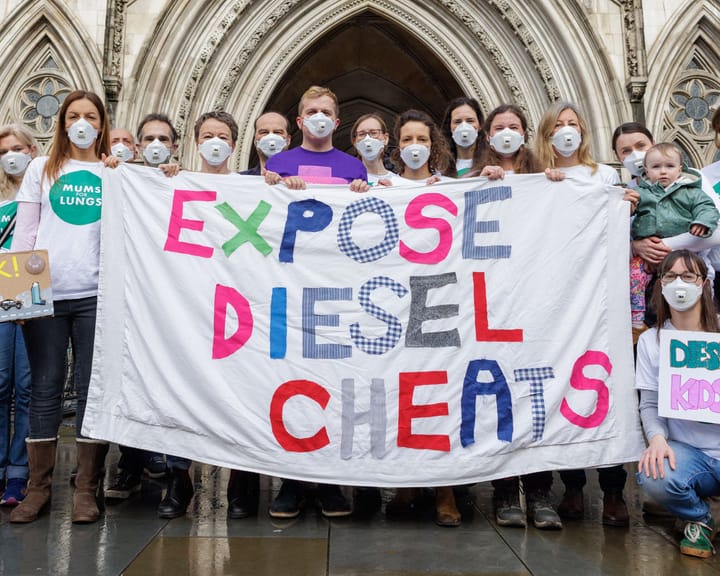

Children's health at stake as Dieselgate fallout continues

“Young lungs continue to suffer the consequences of Dieselgate daily,” says Jemima Hartshorn, who founded the campaign group Mums for Lungs. Her own daughter has had severe respiratory problems, with some episodes so intense that Hartshorn had to restrain her to administer an inhaler.

It has been a decade